Aerospace manufacturers developing increasingly critical embedded applications, such as air traffic control and airborne electronic systems, can’t afford to take chances on the security of their software. These connected systems present multiple attack surfaces that bad actors can exploit. Defending against such attacks is a daunting but unavoidable task.

Too often, security-critical embedded software is developed first and tested later, a sequence that tends to produce insecure code that puts people and property at risk. A key step in creating secure systems is building security into the software development life cycle in accordance with DO-326, covering design, implementation, verification, and validation in parallel with the DO-178C functional safety standard for aerospace. This shift-left, test-early approach costs less and causes fewer headaches than reactive testing and late-stage security verification.

To produce high-quality code and improve the security of these connected embedded devices using this approach, consider the following best practices.

1. Establish requirements at the outset

Undocumented requirements lead to miscommunication and create rework, changes, and bug fixes, as well as security vulnerabilities. To ensure smooth project development, all parts of the product and its development process must be understood by every team member in the same way. Clearly defined functional and security requirements help ensure this.

2. Provide bidirectional traceability

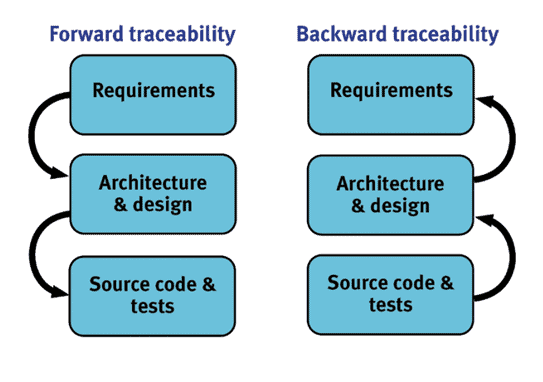

Automation makes it easier to maintain bidirectional traceability (forward and backward) in a changing project environment.

Forward traceability demonstrates that every requirement is reflected at each stage of the development process, including implementation and test. When a requirement changes or a test case fails, impact analysis identifies the affected code and tests so they can be addressed. The revised implementation can then be retested to provide evidence of continued bidirectional traceability.

Equally important is backward traceability, which highlights code that fulfills none of the specified requirements. Such code may stem from oversight, faulty logic, feature creep, or a malicious backdoor, any of which can introduce security vulnerabilities or errors. Automated traceability can isolate the code affected by a change and enable automatic retesting of only the affected functions.

3. Use a secure language subset

For development in C or C++, research suggests that roughly 80% of software defects stem from incorrect use of about 20% of the language. Developers should therefore use language subsets that improve safety and security by disallowing problematic constructs.

Two common subsets are MISRA C and the Carnegie Mellon Software Engineering Institute (SEI) CERT C coding standard, both of which help developers produce secure code. The two have similar security goals but pursue them differently; the biggest difference is the degree of adherence they demand.

Developing code with MISRA C results in fewer coding errors because its rules are stricter, more decidable, and defined on the basis of first principles. Being able to analyze software quickly and easily against the MISRA C guidelines can improve code quality and consistency and reduce time to deployment. By contrast, when rules must be applied retrospectively to existing code, CERT C may be the more pragmatic choice. Analyzing code against CERT C identifies the common programming errors behind most software security attacks.

Applying MISRA C or CERT C results in more secure code. Manual enforcement of such standards on a code base of any significant size is not practical, so a static analysis tool is required to automate the job.

4. Adhere to a security-focused process standard

In safety-critical sectors, such standards frequently complement those focused on functional safety. For example, in the automotive space, SAE J3061 “Cybersecurity Guidebook for Cyber-Physical Vehicle Systems” (soon to be superseded by ISO/SAE 21434 “Road Vehicles – Cybersecurity Engineering”) complements the automotive ISO 26262 functional safety standard. Automated development tools can be integrated into developer workflows for security-critical systems while also accommodating functional safety demands.

5. Automate static and dynamic testing processes

Static analysis is a collective name for test regimes that involve automated inspection of source code. Dynamic analysis, by contrast, involves executing some or all of the code. When these techniques focus on security issues, they are known as static analysis (or application) security testing (SAST) and dynamic analysis (or application) security testing (DAST).

There are wide variations within these groupings. For example, penetration, functional, and fuzz tests are all black-box DAST techniques that don’t require access to source code. They complement white-box DAST techniques, such as unit, integration, and system tests, which exercise the application source code dynamically to reveal vulnerabilities.

6. Test early and often

All the security-related tools, tests, and techniques described here have a place in each life cycle model. In the V-model, they are largely analogous and complementary to the processes usually associated with functional safety application development.

In the DevSecOps model, security-related activities are overlaid on the DevOps life cycle throughout the continuous development process.

Requirements traceability is maintained throughout the development process in the case of the V-model, and for each development iteration in the case of the DevSecOps model (shown in orange in each figure).

Some SAST tools are used to confirm adherence to coding standards, ensure that complexity is minimized, and check that code is maintainable. Others are used to check for security vulnerabilities but only to the extent that such checks are possible on source code without an execution environment.

White-box DAST allows compiled code to be executed and tested in the development environment or, better still, on the target hardware. Code coverage analysis helps confirm that the code fulfills all security and other requirements, and that all code fulfills one or more requirements. These checks can even extend to the object code level if the system requires it.

Robustness testing can be used within the unit test environment to demonstrate that specific functions are resilient, whether in isolation or in the context of their call tree. Fuzz and penetration testing, the black-box techniques traditionally associated with software security, remain valuable, but in this context they serve to confirm and demonstrate the robustness of a system designed and developed with security in mind.

7. Use automated development tools

To facilitate the creation of secure embedded software, developers should have access to automated software development tools that support their work through the entire software development life cycle, from requirements traceability and engineering, through static and dynamic software analysis, to unit and integration testing. The accuracy, determinism, and formal reporting capabilities of such tools help meet the assurance requirements for developing reliable, security-critical software for connected aerospace devices.

Following these shift-left, test-early best practices helps produce high-quality code and improves the security of the connected embedded devices that have brought such seismic changes to the industry.

Liverpool Data Research Associates (LDRA)

From the July 2021 issue of Aerospace Manufacturing and Design.